Multi-Web Server Deployment Behind a Load Balancer on OpenStack

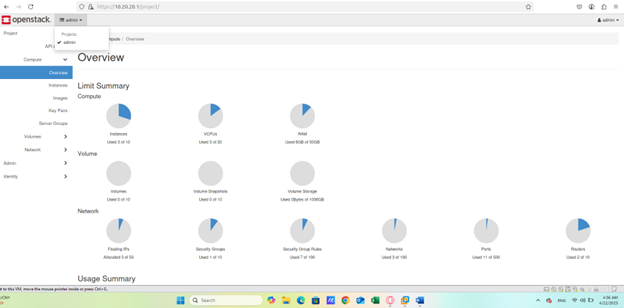

Overview

This project demonstrates deploying multiple web servers on OpenStack MicroStack, each hosting a personal portfolio website, behind a load balancer VM running NGINX. The entire setup runs on a local private network, with optional public exposure through Ngrok.

1. Installing MicroStack

MicroStack was installed on an Ubuntu VM using Snap, providing a lightweight single-node OpenStack cloud.

After initialization, core services (Keystone, Nova, Neutron, Horizon) were verified, and access was confirmed at http://10.20.20.1.

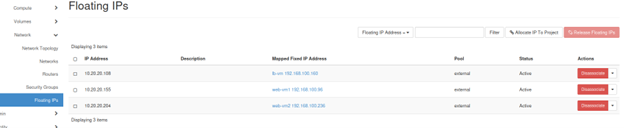

my-private-net with attached subnet and router.

2. Private Network and Floating IPs

A private subnet 192.168.50.0/24 was configured, connected to a router, and linked to the external gateway.

Floating IPs were then allocated and attached to each VM (web-vm1, web-vm2, lb-vm) to allow external access when needed.
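The addressing described above can be sanity-checked with Python's standard `ipaddress` module. This is a minimal sketch, not part of the original project: the subnet comes from the text, while the two sample addresses are hypothetical (the floating IP assumes the external range shares the 10.20.20.x prefix of the Horizon address).

```python
import ipaddress

# Private subnet taken from the text.
PRIVATE_SUBNET = ipaddress.ip_network("192.168.50.0/24")


def is_fixed_ip(address):
    """Return True if the address belongs to the private subnet."""
    return ipaddress.ip_address(address) in PRIVATE_SUBNET


# A /24 holds 256 addresses; the network and broadcast addresses are not usable.
usable_hosts = PRIVATE_SUBNET.num_addresses - 2
print(usable_hosts)

print(is_fixed_ip("192.168.50.11"))  # hypothetical fixed IP of a VM
print(is_fixed_ip("10.20.20.15"))    # hypothetical floating IP
```

A floating IP lives on the external network and is mapped by the router to a VM's fixed IP, so it is expected to fall outside the private subnet. In practice OpenStack also reserves addresses for the gateway and DHCP ports, so slightly fewer than `usable_hosts` are available to instances.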

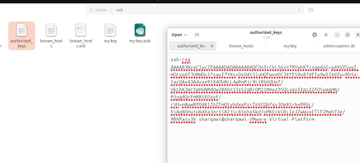

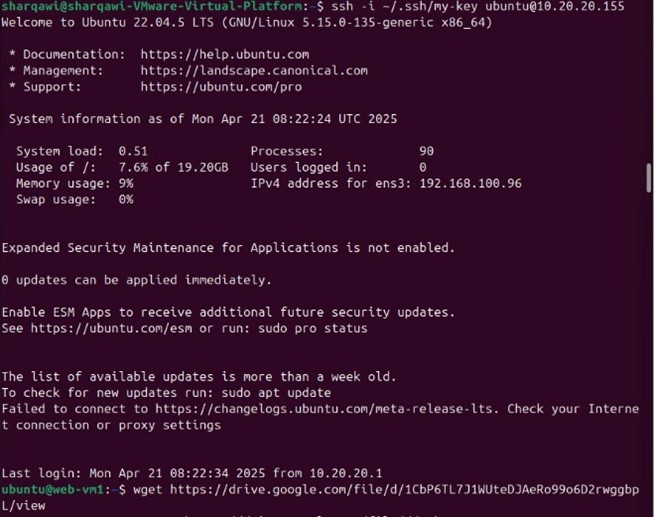

3. VM Creation and SSH Access

The Ubuntu cloud image was uploaded to OpenStack, and instances were created using:

sudo microstack.openstack server create web-vm1 --image Ubuntu --flavor m1.small \
    --network my-private-net --key-name mykey --config-drive true

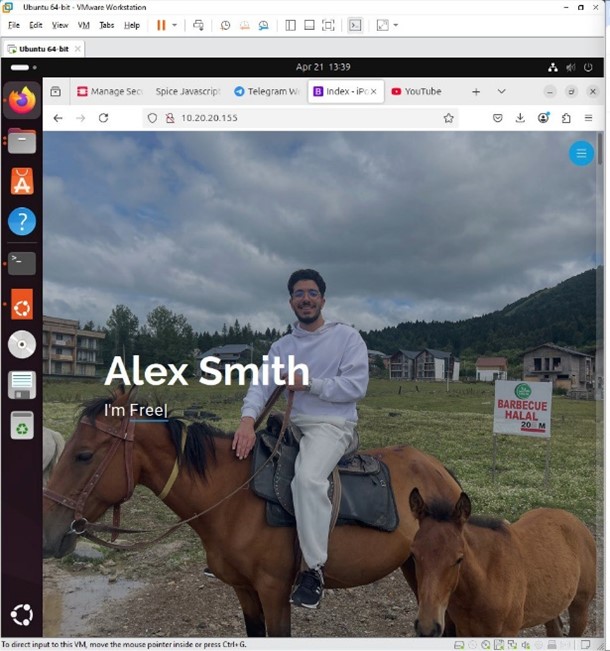

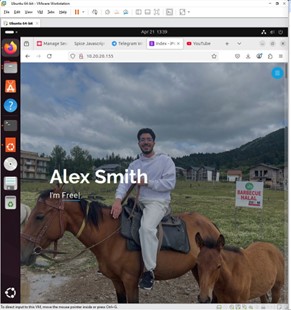

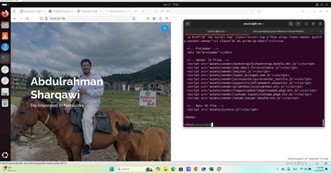

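The --config-drive true flag attaches the instance metadata, including the SSH public key, as a small virtual disk that cloud-init reads at boot, which ensured proper SSH key injection. Each web VM hosted a portfolio website served via Apache.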
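Since the three instances differ only by name, the creation command can be generated rather than retyped. The following is a sketch under the assumption that web-vm2 and lb-vm were created with the same flags as web-vm1; the helper name `server_create_command` is hypothetical. It only prints the commands and does not run them.

```python
import shlex


def server_create_command(name):
    """Build the argument list for creating one instance (flags from the text)."""
    return [
        "sudo", "microstack.openstack", "server", "create", name,
        "--image", "Ubuntu",
        "--flavor", "m1.small",
        "--network", "my-private-net",
        "--key-name", "mykey",
        "--config-drive", "true",
    ]


# Assumption: all three instances share the same image, flavor, and key.
for vm in ("web-vm1", "web-vm2", "lb-vm"):
    print(shlex.join(server_create_command(vm)))
```

To execute a command instead of printing it, the list could be passed to `subprocess.run(cmd, check=True)` on the MicroStack host.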

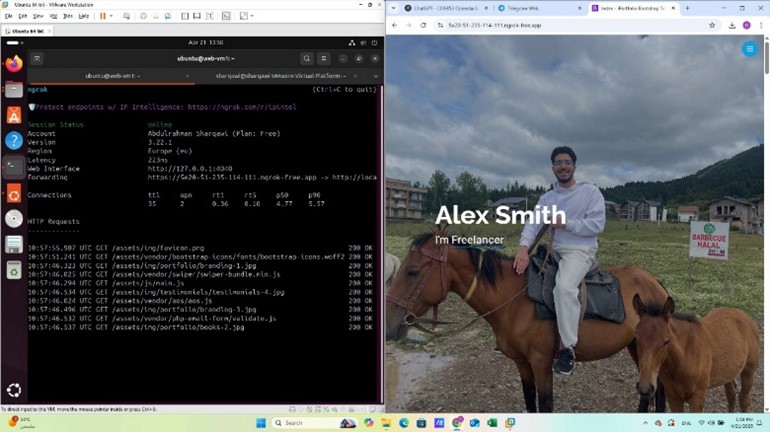

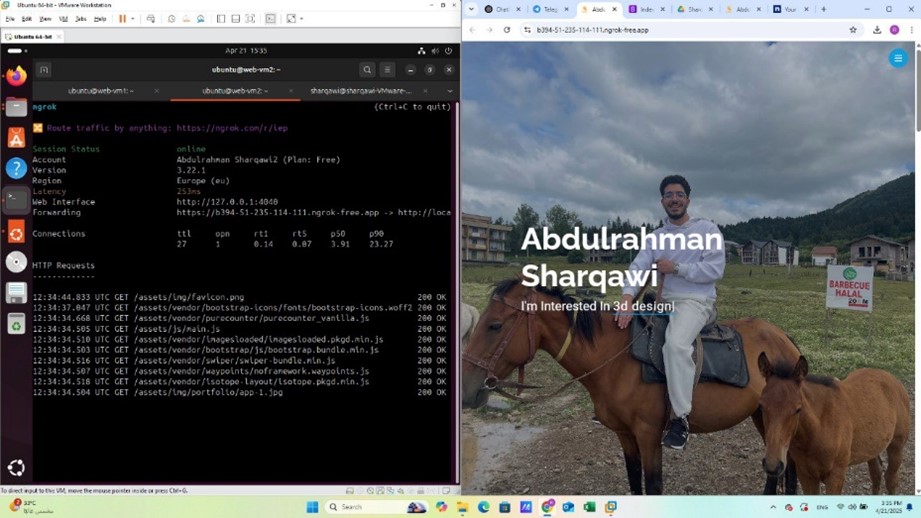

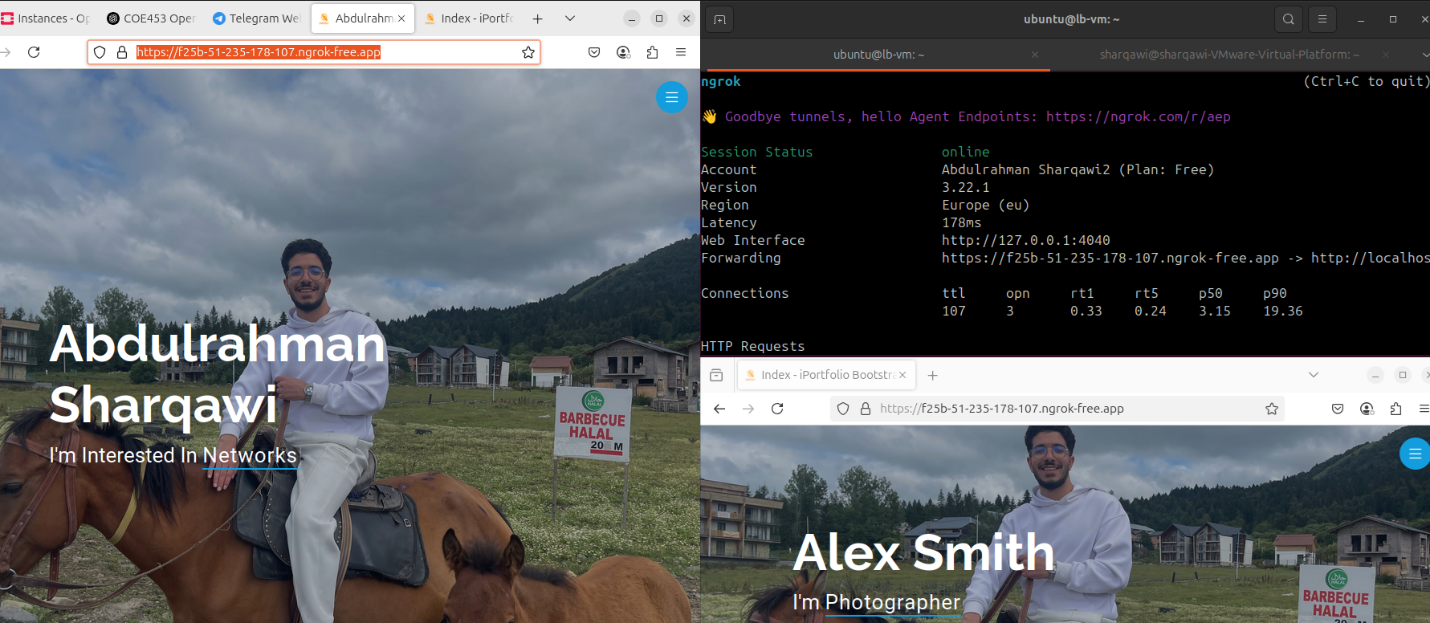

4. Public Access via Ngrok

Ngrok tunnels were configured to expose port 80 for external testing, allowing each web VM to be accessed through temporary HTTPS URLs.
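Because the tunnel URLs are temporary, it can help to read them programmatically. The sketch below assumes the tunnel was started with something like `ngrok http 80` and that the ngrok agent's local API is reachable on its default address (127.0.0.1:4040). The sample payload and its URL are hypothetical and trimmed to the fields used here.

```python
import json
from urllib.request import urlopen

# Assumption: default address of the ngrok agent's local API.
NGROK_API = "http://127.0.0.1:4040/api/tunnels"


def public_https_urls(payload):
    """Return the HTTPS public URLs listed in a tunnels API response."""
    return [
        tunnel["public_url"]
        for tunnel in payload.get("tunnels", [])
        if tunnel.get("public_url", "").startswith("https://")
    ]


def fetch_tunnels(api_url=NGROK_API):
    """Query the local ngrok agent; only works while a tunnel is running."""
    with urlopen(api_url, timeout=5) as response:
        return json.load(response)


# Hypothetical response used so the example runs without ngrok.
sample = {
    "tunnels": [
        {
            "public_url": "https://example-tunnel.ngrok-free.app",
            "config": {"addr": "http://localhost:80"},
        }
    ]
}
print(public_https_urls(sample))
```

On a VM with an active tunnel, `public_https_urls(fetch_tunnels())` would return the current URL to share for testing.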

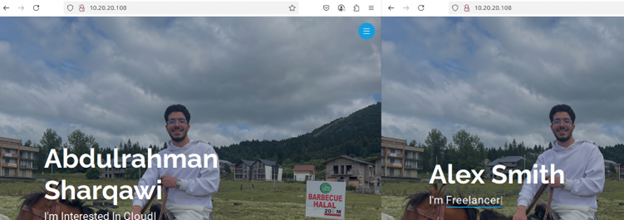

5. Load Balancer Configuration

The lb-vm instance was configured as a load balancer using NGINX as a reverse proxy.

Two backend servers (web-vm1 and web-vm2) were added to the upstream pool with round-robin load distribution.

upstream portfolio {
    server 192.168.100.11;
    server 192.168.100.12;
}

server {
    listen 80;

    location / {
        proxy_pass http://portfolio;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
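The effect of the upstream pool can be illustrated with a simplified model of round-robin selection. This is only a sketch: it reuses the two backend addresses from the configuration above, and it ignores server weights, failed backends, and multiple NGINX worker processes, all of which influence the real scheduler.

```python
from itertools import cycle

# Backend addresses copied from the upstream block.
BACKENDS = ["192.168.100.11", "192.168.100.12"]


def round_robin(backends):
    """Return an iterator that hands out backends in rotating order."""
    return cycle(backends)


picker = round_robin(BACKENDS)
first_four = [next(picker) for _ in range(4)]
print(first_four)
# ['192.168.100.11', '192.168.100.12', '192.168.100.11', '192.168.100.12']
```

With two equally weighted servers, consecutive requests alternate between them, which is the behaviour expected when reloading the load balancer's URL.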

6. Validation and Results

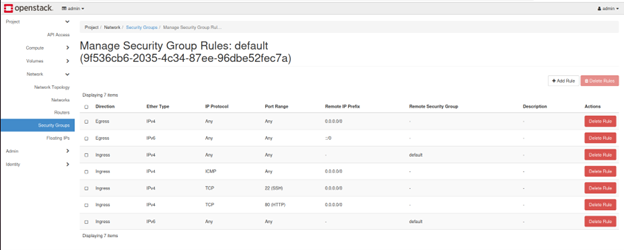

Repeated requests to the load balancer's public Ngrok URL alternated between the two portfolio sites, confirming that NGINX round-robin distribution was working. Network and security group configurations permitted both internal and external access.
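The alternation check can also be scripted instead of reloading the page by hand. The sketch below assumes that each portfolio page has a distinct `<title>` that can serve as a label for the backend that answered. The helper names and the sample labels are hypothetical, and the sample stands in for real responses so the example runs offline.

```python
import re
from collections import Counter
from urllib.request import urlopen


def fetch_title(url):
    """Return the page <title>, used as a stand-in label for the backend."""
    with urlopen(url, timeout=5) as response:
        html = response.read().decode("utf-8", errors="replace")
    match = re.search(r"<title>(.*?)</title>", html, re.IGNORECASE | re.DOTALL)
    return match.group(1).strip() if match else ""


def summarize(labels):
    """Count responses per backend and check that no two neighbours match."""
    counts = Counter(labels)
    alternates = all(a != b for a, b in zip(labels, labels[1:]))
    return counts, alternates


# Hypothetical labels collected from four consecutive requests.
sample = ["web-vm1", "web-vm2", "web-vm1", "web-vm2"]
counts, alternates = summarize(sample)
print(counts["web-vm1"], counts["web-vm2"], alternates)  # 2 2 True
```

Against the live deployment, the labels would come from calling `fetch_title` on the load balancer's URL several times in a row.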

7. Extended Validation & Demonstration

To confirm system functionality, each component was validated both internally and externally.

The load balancer (lb-vm) successfully distributed requests between web-vm1 and web-vm2, while Ngrok exposed the deployment publicly for testing from outside the KFUPM network.

Project Information

- Category: Cloud & Edge Computing

- Course: COE 453 – Cloud and Edge Computing

- Instructor: Dr. Muhammed Afaq

- Technologies: OpenStack MicroStack, Ubuntu, Apache, NGINX, Ngrok

- Students: Abdulrahman Sharqawi, Mohab Hussien, Basil Alashqar

- Documentation: Download Full Report (PDF)

Key Takeaways

- Built and configured a complete OpenStack environment locally using MicroStack.

- Deployed multiple Ubuntu instances hosting separate portfolio websites.

- Set up NGINX reverse proxy as a functional load balancer between VMs.

- Achieved public access through Ngrok tunnels for demonstration.

- Learned fault isolation, floating IP, and cloud networking concepts.

Conclusion

The project successfully deployed load-balanced web servers on a local, single-node OpenStack cloud and made them publicly reachable through Ngrok tunnels. It provided hands-on experience in distributed deployment, cloud networking, and load balancing, forming a strong foundation for scalable web infrastructure design.